
How to achieve speedy application response times

Development and operations teams both have a responsibility to ensure fast application response times. Follow this advice to measure and reduce delay.

The software development industry has an obsession with metrics, and most of these metrics fill me with disdain. When it comes to application response times, however, these measurements are useful.

Application response times for software tend to get progressively worse due to code bloat. Yet, customer expectations keep rising. Done right, application response time measurements can prevent user complaints, or even performance crises.

Know application response time standards

What's your benchmark? The human eye can perceive delays of roughly 0.25 seconds. So, if you reduce page load time from 0.25 to 0.001 seconds, you'll likely spend money on improvements that no customer even notices.

Beyond 0.25 seconds, we get into the response time for real applications. Some organizations choose to interview customers to set expectations, but Geoff Kenyon, a marketing and SEO expert, once conducted a study that included the following findings about web site response times:

- If your site loads in 5 seconds, it is faster than approximately 25% of the web.

- If your site loads in 2.9 seconds, it is faster than approximately 50% of the web.

- If your site loads in 1.7 seconds, it is faster than approximately 75% of the web.

- If your site loads in 0.8 seconds, it is faster than approximately 94% of the web.
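To check a measured load time against these findings, a lookup table is enough. The following is a minimal sketch, not part of the study itself: the name `faster_than_percent` is made up for illustration, and the function treats the four data points as a coarse step function rather than interpolating between them.

```python
# Thresholds taken from the study findings listed above:
# (load time in seconds, approximate percentage of the web that is slower).
BENCHMARKS = [
    (0.8, 94),
    (1.7, 75),
    (2.9, 50),
    (5.0, 25),
]


def faster_than_percent(load_seconds: float) -> int:
    """Return the percentage of sites this load time is at least faster than.

    Picks the tightest listed threshold that the load time meets. Load times
    above 5 seconds fall outside the listed data, so the function returns 0.
    """
    for threshold, percent in BENCHMARKS:
        if load_seconds <= threshold:
            return percent
    return 0  # slower than every listed threshold
```

A page that loads in 2 seconds, for example, misses the 1.7-second mark but meets the 2.9-second one, so the function reports 50.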

There are dozens of tools to measure page load times, from simple browser plugins to complex cloud-based software that assesses end-to-end performance with site access from a dozen locations.

Try to keep measurements as end-to-end and realistic as possible. System performance is, after all, the sum of all the components in the system. Once you complete your measurements, break them down into latency and response times.

Difference between latency and response time

Latency is the time it takes to move a message across the network in one direction, from the server to the client. Response time, as used here, is the time the request spends on the server.

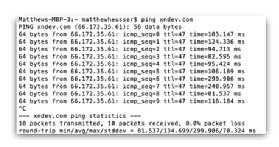

The ping command-line tool takes a hostname or an IP address and sends an echo request over the Internet Control Message Protocol (ICMP). ICMP does not use ports, and the echo request spends almost no time on the server. That means the ping time, divided by two, roughly equals the latency.

In this example of the ping command, note how the round-trip average takes around 134 milliseconds, or around 67 milliseconds per trip.
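The same arithmetic is easy to script. This sketch parses the summary line that ping prints at the end of a run and halves the average. The sample line is hypothetical, written to match the 134-millisecond average described above; it follows the macOS/BSD layout, while Linux labels the same fields `rtt min/avg/max/mdev`. The function name is an assumption as well.

```python
import re

# Hypothetical summary line in the macOS/BSD layout.
# Linux prints "rtt min/avg/max/mdev = ..." with the same four numbers.
SAMPLE_SUMMARY = "round-trip min/avg/max/stddev = 128.410/134.000/141.250/4.120 ms"

# Four slash-separated numbers after the equals sign: min/avg/max/deviation.
SUMMARY_PATTERN = re.compile(r"=\s*([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+)\s*ms")


def one_way_latency_ms(summary_line: str) -> float:
    """Estimate one-way latency as half of ping's average round-trip time."""
    match = SUMMARY_PATTERN.search(summary_line)
    if match is None:
        raise ValueError("no ping summary found in line")
    average_round_trip_ms = float(match.group(2))
    return average_round_trip_ms / 2


print(one_way_latency_ms(SAMPLE_SUMMARY))  # 67.0
```

Halving the round trip assumes the path is symmetric, which is a reasonable first approximation but not guaranteed on real networks.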

In real use, however, measuring application response times typically requires logs from the server. Developers should record the time-to-process in each log entry, then make that data available through a searchable big data system. Use this system to run queries for averages and statistics related to application performance.
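One way to record time-to-process is a decorator around each request handler. The sketch below is only an illustration: `log_time_to_process`, `get_order` and the JSON field names are hypothetical, and the sleep stands in for real work. It writes one JSON line per call, a shape most log search systems can index.

```python
import functools
import json
import logging
import time

logger = logging.getLogger("timing")


def log_time_to_process(handler):
    """Wrap a handler so every call logs its processing time as a JSON line."""
    @functools.wraps(handler)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return handler(*args, **kwargs)
        finally:
            # Runs whether the handler returned or raised, so failures are timed too.
            elapsed_ms = (time.perf_counter() - start) * 1000
            logger.info(json.dumps({
                "handler": handler.__name__,
                "time_to_process_ms": round(elapsed_ms, 3),
            }))
    return wrapper


@log_time_to_process
def get_order(order_id):
    """Hypothetical handler; the sleep is a stand-in for real work."""
    time.sleep(0.01)
    return {"order_id": order_id}
```

Because the timing happens in a `finally` clause, slow failures show up in the statistics alongside slow successes.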

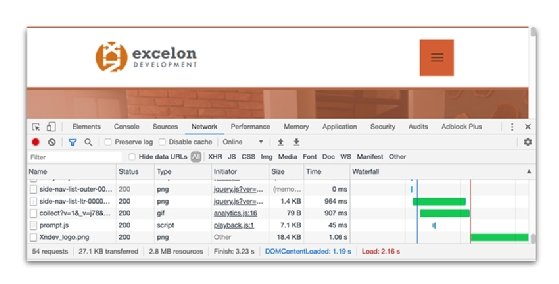

There is another way to gauge response times in Google Chrome. Select the Developer Tools option from the menu. Select the Network tab, then give the browser a hard refresh. You will see a waterfall diagram of image and page element loads.

These tools measure how long it takes for a request to complete a round trip. Take the total time, subtract the round-trip latency (roughly the ping time), and you get an estimate of the response time. Similar tools exist for mobile devices.
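As a rough sketch of that subtraction, the snippet below takes a few waterfall timings and removes the network round trip from each. The resource names and totals are invented for illustration; the 134-millisecond figure is the ping average from the earlier example. A real request can involve several round trips (connection setup, TLS), so treat the result as an estimate.

```python
def server_time_ms(total_ms: float, ping_round_trip_ms: float) -> float:
    """Estimate time on the server: total request time minus one network round trip."""
    return max(total_ms - ping_round_trip_ms, 0.0)


# Hypothetical waterfall entries: (resource, total time in milliseconds).
waterfall = [("/index.html", 410.0), ("/api/cart", 905.0), ("/logo.png", 150.0)]
PING_ROUND_TRIP_MS = 134.0  # ping average from the earlier example

estimates = {
    name: server_time_ms(total, PING_ROUND_TRIP_MS) for name, total in waterfall
}
slowest = max(estimates, key=estimates.get)

print(estimates)  # {'/index.html': 276.0, '/api/cart': 771.0, '/logo.png': 16.0}
print(slowest)    # /api/cart
```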

Give applications a performance boost

Once you figure out response time, tune and optimize performance. Generally, the idea is simple: Find the service that causes the greatest or most disruptive delay, then break that down into different time categories, such as on-network, on-web server and on-API. Once you find the slow component, break that down into pieces. Figure out what causes that delay, and fix it. If you can, run the requests in parallel.
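To illustrate the last point, here is a minimal sketch of running independent requests in parallel with a thread pool. The service names and delays are hypothetical, and `call_service` sleeps instead of making a real network call. Run one after another, the three calls would take about 0.3 seconds; in parallel, the total is close to the slowest single call.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def call_service(name: str, delay_seconds: float) -> str:
    """Stand-in for a network call; sleeps instead of doing real I/O."""
    time.sleep(delay_seconds)
    return f"{name}: done"


# Hypothetical services with simulated delays in seconds.
SERVICES = [("inventory", 0.10), ("pricing", 0.10), ("reviews", 0.10)]


def fetch_all_parallel(services):
    """Start every call at once and collect the results in submission order."""
    with ThreadPoolExecutor(max_workers=len(services)) as pool:
        futures = [pool.submit(call_service, name, delay) for name, delay in services]
        return [future.result() for future in futures]


start = time.perf_counter()
results = fetch_all_parallel(SERVICES)
elapsed = time.perf_counter() - start
print(results, f"{elapsed:.2f}s")
```

This only helps when the requests do not depend on each other's results; a call that needs the output of another still has to wait for it.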

If the problem is latency, there isn't a lot for programmers to do, as the issue is infrastructure between the client and the web server. Latency is in the hands of the operations staff.

Most web applications include a great deal of static content -- files that are exactly the same, every time -- mostly in the form of webpages, JavaScript and images. If you serve the images from the data center, one way to improve latency is to move the data center close to internet exchange points. More realistically, a content delivery network (CDN) can place the files in dozens of places all over the internet, then route requests to the closest data center. Operations might also configure the web servers to include entity tags in their responses, so the browser can cache images, instead of reloading them -- that is how the load times fell to 0 milliseconds in the image above.
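The entity tag mechanism can be sketched in a few lines. In this simplified illustration, the server derives an ETag from a hash of the file and answers 304 Not Modified when the browser sends the same tag back in its If-None-Match header. The function names are hypothetical, and real web servers handle more cases, such as lists of tags and weak validators.

```python
import hashlib
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    """Build an entity tag from a hash of the content; ETag values are quoted."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body: bytes, if_none_match: Optional[str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload) for a request for a static file."""
    etag = make_etag(body)
    headers = {"ETag": etag}
    if if_none_match == etag:
        # The browser's cached copy is still current: send no body at all.
        return 304, headers, b""
    return 200, headers, body


image = b"fake image bytes"
status, headers, payload = respond(image, None)            # first visit
status_again, _, payload_again = respond(image, headers["ETag"])  # revisit
print(status, status_again)  # 200 304
```

On the revisit, the server sends headers only, which is why a cached image costs almost nothing to load.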

Lower-complexity images reduce file size, while minified code reduces the size of text files. Over a large number of files, these changes modestly improve overall web application performance.

Some software designers rely on incremental rendering, where page elements load and display piece by piece to create a better user experience even when overall response times don't improve. For example, if you go to Amazon's retail site, you will notice that it renders as a bunch of boxes. If Recently viewed or Recommended for you doesn't render, the site still shows everything else. Just as developers perform incremental work on software projects, you can structure your websites the same way.

What constitutes problematic application response time

Sometimes, metrics don't tell the story. Very few people decide to get a haircut by measuring it with a ruler. More likely, you base that decision on a schedule -- a specific number of weeks or months. Others might make this judgment subjectively when they look in the mirror.

These ideas of time translate to application response times. If I, as a human, can feel a lag in the load, then the lag is probably over 0.5 seconds.

But, even if you feel the lag, it might not matter. If the software is built for an internal, customer service group, load times might not be a priority. Likewise, if the software is new and innovative, with no real competitors, then a delay up to a second might be an acceptable response time -- for now. Once the software has competitors, customers can browse away without a second thought.

User experience matters

As I found out when I started my own firm, there is real business value in telling the customer that you are working on a problem. After all, everything connects back to the user experience.

One of my customers is currently working on a progress bar. It runs on a timer, which means it has no relation to how long the process actually takes. The bar crosses the screen in 15 seconds, at which point, if the data has not loaded, the system times out. If the elements load in five seconds, the app does not cut to the next screen. Instead, it quickly fills in the progress bar. It might take another 0.5 seconds for the progress indicator to push across the screen, but it feels more consistent and believable for the user. That half a second might lead to a better user experience.
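The behavior described here can be sketched as a small function of elapsed time. This is my own reconstruction, not the customer's code: the function names are hypothetical, and the linear 0.5-second catch-up is an assumption based on the figures above.

```python
from typing import Optional

TIMEOUT_SECONDS = 15.0   # the bar crosses the screen in this time
CATCH_UP_SECONDS = 0.5   # assumed length of the quick fill once data has loaded


def bar_fraction(elapsed: float, loaded_at: Optional[float]) -> float:
    """Return how full the bar is (0.0 to 1.0) after `elapsed` seconds.

    `loaded_at` is the moment the data finished loading, or None if it has not.
    """
    if loaded_at is None or elapsed < loaded_at:
        # Still waiting: the bar advances on the timer alone.
        return min(elapsed / TIMEOUT_SECONDS, 1.0)
    # Data is in: fill the remaining distance over the catch-up window.
    base = loaded_at / TIMEOUT_SECONDS
    catch_up = min((elapsed - loaded_at) / CATCH_UP_SECONDS, 1.0)
    return base + (1.0 - base) * catch_up


def timed_out(elapsed: float, loaded_at: Optional[float]) -> bool:
    """The system gives up if the timer runs out before the data loads."""
    return loaded_at is None and elapsed >= TIMEOUT_SECONDS
```

With data arriving at five seconds, the bar sits at one third, then runs to full by 5.5 seconds instead of jumping straight to the next screen.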

Editor's note: This article replaces content that was originally written by Scott Barber in March 2007, before iPhones and Instagram. Matt Heusser furnished the new article in 2019, due to the great change in web and mobile computing that occurred over that span of time.