Agile project management (APM)

What is Agile project management (APM)?

Agile project management (APM) is an iterative approach to planning and guiding project processes. It breaks project processes down into smaller cycles called sprints, or iterations.

Agile project management enables software development teams to work quickly and collaboratively while adapting to changing requirements. It also lets teams respond quickly to feedback, so they can make changes in each sprint and product cycle.

As in Agile software development, where an iteration refers to a single development cycle, an Agile project is completed in small sections. Each section or iteration is reviewed and critiqued by the project team, which should include representatives of the project's various stakeholders. Insights gained from the critique of an iteration are used to determine the next step in the project.

Agile project management focuses on working in small batches, visualizing processes and collaborating with end users to gain feedback. Continuous releases are also a focus, because each release typically incorporates the feedback received during the iteration.

The main benefit of Agile project management is its ability to respond to issues as they arise over the course of a project. Making a necessary change at the right time can save resources and help deliver a successful project on time and within budget.

What is Agile project methodology?

The Agile project methodology breaks projects into small pieces. These pieces are completed in sprints that generally run anywhere from a few days to a few weeks. Each sprint covers the work from the initial design phase through testing and quality assurance (QA).

The Agile methodology enables teams to release segments as they're completed. This continuous release schedule lets teams demonstrate that these segments work and, if they don't, fix flaws quickly. Proponents argue that this reduces the chance of large-scale failures because improvement is continuous throughout the project lifecycle.

How APM works

Agile teams build rapid feedback, continuous adaptation and QA best practices into their iterations. They adopt practices such as continuous integration and continuous deployment, using tools that automate steps to speed up the release and use of products.

Additionally, Agile project management calls for teams to continuously evaluate time and cost as they move through their work. They use velocity, burndown and burnup charts to measure their work instead of using Gantt charts and project milestones to track progress.
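Velocity, burndown and burnup charts are plotted from simple arithmetic on completed work. The sketch below computes their data points, assuming work is sized in story points and that velocity is a rolling average of the last three sprints; both are common conventions, not requirements of APM, and the numbers are hypothetical.

```python
import math
from itertools import accumulate
from statistics import mean

# Illustrative data only: story points completed in each finished sprint.
COMPLETED_PER_SPRINT = [21, 18, 24, 22]
TOTAL_SCOPE = 150  # hypothetical total story points in the backlog


def velocity(history: list[int], window: int = 3) -> float:
    """Average points completed over the most recent `window` sprints."""
    return mean(history[-window:])


def burndown(total_scope: int, history: list[int]) -> list[int]:
    """Points remaining before the first sprint and after each sprint."""
    remaining = [total_scope]
    for done in history:
        remaining.append(max(remaining[-1] - done, 0))
    return remaining


def burnup(history: list[int]) -> list[int]:
    """Cumulative points completed after each sprint."""
    return list(accumulate(history))


def sprints_remaining(points_left: int, current_velocity: float) -> int:
    """Rough forecast: sprints needed to finish at the current velocity."""
    return math.ceil(points_left / current_velocity)


if __name__ == "__main__":
    v = velocity(COMPLETED_PER_SPRINT)
    down = burndown(TOTAL_SCOPE, COMPLETED_PER_SPRINT)
    print(f"velocity: {v:.1f} points/sprint")
    print("burndown:", down)
    print("burnup:", burnup(COMPLETED_PER_SPRINT))
    print("forecast:", sprints_remaining(down[-1], v), "more sprints")
```

A burndown chart plots the remaining points falling toward zero, while a burnup chart plots completed points rising toward the total scope line, which makes scope changes easier to see. The forecast is only as reliable as the team's recent velocity.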

Agile project management doesn't require the presence or participation of a project manager. Although a project manager is essential for success under the traditional project delivery methodologies -- such as the Waterfall model, where the position manages the budget, personnel, project scope and other key elements -- the project manager's role under APM is distributed among team members.

For instance, the product owner sets project goals, while team members divvy up scheduling, progress reporting and quality tasks. Certain Agile approaches add other layers of management. The Scrum approach, for example, calls for a Scrum Master who helps set priorities and guides the project through to completion.

Project managers can still play a part in Agile project management. Many organizations use them on Agile projects, particularly larger, more complex ones. These organizations generally place project managers in more of a coordinator role, with the product owner taking responsibility for the project's overall completion.

Given the shift in work from project managers to Agile teams, Agile project management demands that team members know how to work within the framework. They must be able to collaborate with each other and with users. They must be able to communicate well to keep projects on track. And they should feel comfortable taking appropriate actions at the right times to keep pace with delivery schedules.

Agile values and principles

Agile project management is built on the four values and 12 principles set out in the Agile Manifesto. The four values make clear that APM is collaborative and people-oriented, with the goal of creating functional software that delivers value to the end user. These four values are as follows:

- Individuals and interactions should be valued over processes and tools.

- Working software should be valued over comprehensive documentation.

- Customer collaboration should be valued over contract negotiation.

- Responding to change should be valued over following a plan.

The 12 principles of Agile project management are as follows:

- Early and continuous delivery of software is the highest priority to achieve customer satisfaction.

- Teams must be able to change requirements at any point in the development process, even in late stages.

- Prioritize continuous creation and deployment of working software in short succession.

- Developers must work together with end users and project stakeholders throughout the project.

- Build projects around motivated individuals. Give them the environment and support they need, and trust them to get the job done.

- Convey information in development teams through face-to-face conversations, if possible.

- Working software is the primary measure of progress.

- Developers must maintain a constant pace to continue a sustainable development process.

- Continuous attention to technical excellence and good design enhances agility.

- Simplicity, the art of maximizing the amount of work not done, is essential.

- Teams must be self-organizing to produce the best software.

- Teams need to reflect on how to become more effective at regular intervals.

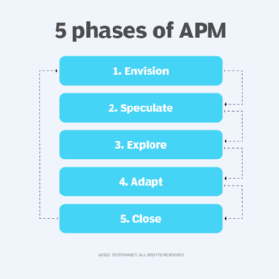

The 5 phases of APM

There are five main phases involved in the APM process:

- Envision. The project and overall product are first conceptualized in this phase, and the needs of the end customers are identified. This phase also determines who will work on the project and who its stakeholders are.

- Speculate. This phase involves creating the initial requirements for the product. Teams work together to brainstorm a features list for the final product, then identify milestones for the project timeline.

- Explore. Teams work on the project while staying within its constraints, but they also explore alternative ways to fulfill project requirements. Teams work on one milestone at a time and iterate on it before moving on to the next.

- Adapt. Delivered results are reviewed and teams adapt as needed. This phase focuses on changes or corrections based on customer and staff perspectives. Feedback should be given continually so that each part of the project meets end-user requirements. The project should improve with each iteration.

- Close. The final project is measured against the updated requirements. Mistakes or issues encountered during the process are reviewed to avoid similar problems in the future.

History of APM

The 21st century saw a rapid rise in the use of the Agile project management methodology, particularly for software development projects and other IT initiatives.

However, the concept of continuous development dates back to the mid-20th century and has taken various forms, championed by different leaders over the decades. For example, there was James Martin's Rapid Iterative Production Prototyping, an approach that served as the premise for the 1991 book Rapid Application Development and the approach of the same name, RAD.

A specific Agile project management framework that has evolved in more recent years is Scrum. This methodology features a product owner who works with a development team to create a product backlog -- a prioritized list of the features, functionalities and fixes required to deliver a successful software system. The team then delivers the pieces in rapid increments.
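The product backlog described above can be modeled as a prioritized list from which the team pulls work for each increment. The sketch below is a minimal illustration, assuming hypothetical names (`BacklogItem`, `plan_sprint`), a convention that a lower `priority` number means higher priority, and a point-based sprint capacity; Scrum itself does not prescribe any of these.

```python
from dataclasses import dataclass


@dataclass
class BacklogItem:
    title: str
    kind: str      # e.g. "feature", "functionality" or "fix"
    priority: int  # assumed convention: lower number = higher priority
    points: int    # hypothetical size estimate


def plan_sprint(backlog: list[BacklogItem], capacity: int) -> list[BacklogItem]:
    """Take items in priority order until the sprint's capacity is used up."""
    selected: list[BacklogItem] = []
    used = 0
    for item in sorted(backlog, key=lambda i: i.priority):
        if used + item.points > capacity:
            break  # stop at the first item that doesn't fit, preserving strict order
        selected.append(item)
        used += item.points
    return selected


if __name__ == "__main__":
    backlog = [
        BacklogItem("Login page", "feature", 1, 5),
        BacklogItem("Fix checkout crash", "fix", 0, 3),
        BacklogItem("Export to CSV", "functionality", 3, 8),
        BacklogItem("Password reset", "feature", 2, 5),
    ]
    for item in plan_sprint(backlog, capacity=13):
        print(item.priority, item.title, item.points)
```

Stopping at the first item that doesn't fit keeps the sprint aligned with the product owner's ordering; a team could instead skip that item and keep filling capacity with smaller, lower-priority work, which trades strict priority for fuller sprints.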

Additional Agile frameworks include Lean, Kanban and Extreme Programming (XP).

Benefits of Agile project management

Advocates for Agile project management say the methodology delivers numerous benefits, including the following:

- More freedom. APM lets team members work in ways that play to their strengths.

- Efficient use of resources. This enables rapid deployment with minimal waste.

- Greater flexibility and adaptability. Developers can more readily adapt to change and make needed adjustments.

- Rapid detection of problems. This enables quicker fixes and better control of projects.

- Increased collaboration with users. This leads to products that better meet user needs.

- Less upfront definition. Compared with traditional project management methods such as Waterfall, APM doesn't require goals and processes to be as clearly defined at the start of development.

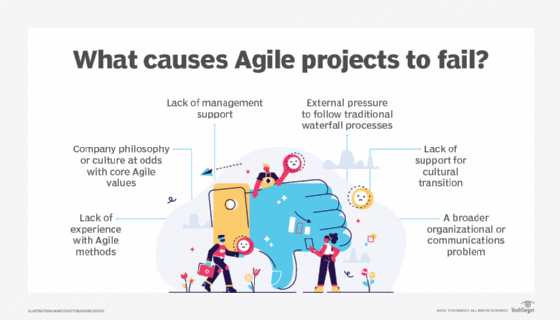

Drawbacks of Agile project management

Agile project management also has some potential drawbacks, including the following:

- Inconsistent results. A project can go off track because there are fewer predetermined courses of action at the start of a project.

- Progress is difficult to measure. Without a fixed plan to measure against, it's harder to tell when a project is going off track, which makes outcomes less predictable.

- Time constraints. Agile management relies on making decisions quickly, so it isn't well suited to organizations that take a long time to analyze issues.

- Communication can be challenging. Team members must collaborate continually with each other and with end users to make the best possible product.

APM vs. Waterfall

Agile project management was, and remains, a counter to the Waterfall methodology. The Waterfall methodology features a strict sequential approach to projects, where initiatives start with gathering all requirements before the work begins. The next steps are scoping out the resources needed, establishing budgets and timelines, performing the actual work, testing and then delivering the project as a whole when all the work is complete.

In response to recognized problems with that approach, 17 software developers published the Agile Manifesto in 2001, outlining four values and 12 principles of Agile software development. These principles continue to guide Agile project management today.

Examples of Agile project management

Teams often pick one or two of the following APM methods to implement:

- Scrum. Scrum is a framework for project management that emphasizes teamwork, accountability and iterative progress toward a well-defined goal. The framework is based on empiricism: teams start from what can be observed or known, then track progress and adjust the process as necessary. Scrum includes events such as sprints, sprint planning meetings, daily scrums, sprint reviews and sprint retrospectives. Scrum roles include product owner, Scrum Master and the development team. These roles support the three pillars of Scrum: transparency, inspection and adaptation.

- Extreme Programming. XP is an Agile software method that emphasizes the improvement of software quality through frequent releases in short development cycles, introducing checkpoints for adopting new customer requirements. XP focuses on development practices, such as expecting changes, programming in pairs, code clarity, code review and unit testing, as well as not programming features until they're required.

- Feature-driven development. FDD is a customer-centric software development methodology known for short iterations and frequent releases. Although it's similar to Scrum, FDD requires the project business owner to attend initial design meetings and iteration retrospectives. The FDD process includes developing a model, building a list of features, planning out each feature, and designing and building each feature.

- Lean software development. Lean software development is a concept that emphasizes optimizing efficiency and minimizing waste in the software development process. Its principles include eliminating waste, building quality in, promoting learning, deferring commitments until more is known and optimizing the whole process.

- Adaptive software development. ASD is an early iterative form of Agile software development that focuses on continuous learning throughout the project as opposed to sticking to a set plan. ASD has a speculation or planning phase, a collaboration phase and a learning phase.

- Kanban. Kanban is a visual system used to manage and keep track of work as it moves through a process. In manufacturing, Kanban starts with the customer's order and follows production downstream. Work items are represented on a Kanban board, enabling team members to see every piece of work at once.
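A Kanban board like the one described above can be sketched as an ordered set of columns that work items move through from left to right. The example below is a minimal sketch, assuming a hypothetical `KanbanBoard` class and hypothetical column names ("To do", "In progress", "Done"); real boards usually define their own columns and rules.

```python
class KanbanBoard:
    """Minimal board: ordered columns, items only move downstream."""

    def __init__(self, columns: list[str]) -> None:
        self.order = list(columns)                 # column order = flow order
        self.columns = {name: [] for name in columns}

    def add(self, item: str) -> None:
        """New work always enters the first column."""
        self.columns[self.order[0]].append(item)

    def advance(self, item: str) -> str:
        """Move an item one column downstream and return the new column name."""
        for i, name in enumerate(self.order):
            if item in self.columns[name]:
                if i == len(self.order) - 1:
                    raise ValueError(f"{item!r} is already in the final column")
                self.columns[name].remove(item)
                next_column = self.order[i + 1]
                self.columns[next_column].append(item)
                return next_column
        raise KeyError(item)

    def snapshot(self) -> dict[str, list[str]]:
        """Every piece of work at once, grouped by column."""
        return {name: list(items) for name, items in self.columns.items()}


if __name__ == "__main__":
    board = KanbanBoard(["To do", "In progress", "Done"])
    board.add("Order #1")
    board.add("Order #2")
    board.advance("Order #1")
    board.advance("Order #1")
    board.advance("Order #2")
    print(board.snapshot())
```

Keeping the columns in a fixed order and only allowing one-step moves mirrors the downstream flow of work; `snapshot` corresponds to the board view that lets the team see every item at once.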
