Cloud testing

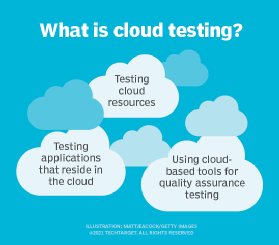

What is cloud testing?

Cloud testing is the process of using the cloud computing resources of a third-party service provider to test software applications. The term can refer to testing cloud resources themselves, such as a provider's architecture, testing cloud-native software as a service (SaaS) offerings, or using cloud tools as part of a quality assurance (QA) strategy.

Cloud testing can be valuable to organizations in a number of ways. For organizations testing cloud resources, it can help ensure the performance, availability and data security of the associated infrastructure or platform and minimize its downtime.

Organizations test cloud-based SaaS products to ensure applications are functioning properly. For companies testing other types of applications, using cloud computing tools instead of on-premises QA tools can cut testing costs and improve collaboration between QA teams.

Types of cloud testing

Broadly, cloud testing refers to testing applications through cloud computing resources, but there are three main types of cloud testing that vary by purpose:

- Testing of cloud resources. The cloud's architecture and other resources are assessed for performance and proper functioning. This involves testing a provider's platform as a service (PaaS) or infrastructure as a service (IaaS). Common tests may assess scalability, disaster recovery (DR), and data privacy and security.

- Testing of cloud-native software. QA testing of SaaS products that reside in the cloud.

- Testing of software with cloud-based tools. Using cloud-based tools and resources for QA testing.
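The first type is easier to picture with an example. Below is a minimal sketch of a disaster recovery test, one of the common tests of cloud resources. The test records a checksum for every file, simulates data loss, restores the data and reports any file whose contents changed. Local directories stand in for the provider's storage and backup service, and the helper names `checksum` and `dr_round_trip` are hypothetical. A real DR test would call the provider's own backup and restore mechanisms in place of `shutil`.

```python
from __future__ import annotations

import hashlib
import shutil
import tempfile
from pathlib import Path


def checksum(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def dr_round_trip(data_dir: Path, backup_dir: Path, restore_dir: Path) -> list[str]:
    """Back up data_dir, simulate losing it, restore it, and return the
    relative paths of files whose checksums differ (empty list = pass)."""
    before = {p.relative_to(data_dir): checksum(p)
              for p in data_dir.rglob("*") if p.is_file()}
    shutil.copytree(data_dir, backup_dir)      # stand-in for the backup step
    shutil.rmtree(data_dir)                    # simulate data loss
    shutil.copytree(backup_dir, restore_dir)   # stand-in for the restore step
    after = {p.relative_to(restore_dir): checksum(p)
             for p in restore_dir.rglob("*") if p.is_file()}
    # A file counts as a mismatch if it is missing on either side or changed.
    return sorted(str(k) for k in before.keys() | after.keys()
                  if before.get(k) != after.get(k))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        data = root / "data"
        data.mkdir()
        (data / "orders.csv").write_text("id,total\n1,9.99\n")
        bad = dr_round_trip(data, root / "backup", root / "restored")
        print("DR test passed" if not bad else f"Mismatched files: {bad}")
```

Comparing content checksums instead of file counts also catches silent corruption during restore, which is the "data loss" failure a DR test exists to rule out.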

Benefits of cloud testing

Here are some of the primary benefits associated with cloud testing:

- Cost-effectiveness. Cloud testing is often more cost-efficient than traditional testing, because customers pay only for the resources they use.

- Availability and collaboration. Resources can be accessed from any device with a network connection. QA testing efforts are not limited by physical location. This, along with built-in collaboration tools, can make it easier for testing teams to collaborate in real time.

- Scalability. Compute resources can be scaled up or down according to testing demands.

- Faster testing. Cloud testing can be faster than traditional testing, because the provider takes over many IT management tasks, such as provisioning and maintaining test hardware. This can lead to faster time to market.

- Customization. A variety of testing environments can often be simulated.

- Simplified disaster recovery. Data backup and recovery efforts are typically less intensive than with traditional methods.
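The pay-per-use argument behind cost-effectiveness is a simple comparison, sketched below. Every rate and workload in it is a made-up assumption, and so are the class and function names. The sketch compares billing only the instance-hours each test run uses against paying each month for enough fixed servers to handle the largest run.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CloudTestRun:
    instances: int       # test machines provisioned for this run
    hours: float         # how long those machines stay allocated
    hourly_rate: float   # per-instance price in dollars (hypothetical)


def cloud_cost(runs: list[CloudTestRun]) -> float:
    """Pay-per-use: bill only the instance-hours each run actually consumes."""
    return sum(r.instances * r.hours * r.hourly_rate for r in runs)


def on_prem_cost(peak_instances: int, monthly_cost_per_server: float) -> float:
    """Fixed capacity: servers sized for the largest run are paid for all
    month, whether or not tests are running."""
    return peak_instances * monthly_cost_per_server


if __name__ == "__main__":
    # Hypothetical month: 30 small nightly regression runs plus one large load test.
    month = [CloudTestRun(4, 2.0, 0.20) for _ in range(30)]
    month.append(CloudTestRun(50, 3.0, 0.20))
    print(f"cloud:   ${cloud_cost(month):,.2f}")
    print(f"on-prem: ${on_prem_cost(50, 150.0):,.2f}")
```

With these invented figures, the cloud side comes to about $78 against $7,500 for fixed capacity. The savings come from the bursty workload. If all 50 instances were needed around the clock (50 × 720 hours × $0.20 = $7,200), the gap would mostly disappear.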

Challenges with cloud testing

Cloud testing has its drawbacks. A lack of standards around integrating public cloud resources with on-premises resources, concerns over security in the cloud, hard-to-understand service-level agreements (SLAs), and limited configuration options and bandwidth can all contribute to delays and added costs. Here are some of the broad challenges associated with the use of cloud testing:

- Security and privacy of data. As with broader use of the cloud, security and privacy concerns linger with cloud testing. In addition, because the cloud environment is outsourced, the customer gives up some control over security and privacy.

- Multi-cloud models. Multi-cloud models that use different types of clouds -- public, private or hybrid -- sometimes across multiple cloud providers, pose complications with synchronization, security and other domains.

- Developing the environment. Reproducing specific server, storage and network configurations in the cloud can be difficult, and mismatches can lead to testing issues.

- Replicating the user environment. Ideally, the application is tested in an environment similar to that of end users, but discrepancies cannot always be avoided.

- Testing across the full IT system. Cloud testing must cover the application, servers, storage and network, and must also validate the interactions among all layers and components.

- Test environment control limitations. Aspects that should be tested may be beyond what the test environment can control.

- Potential bandwidth issues. Bandwidth availability can fluctuate because the provider's resources are shared with other users.
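Several of these challenges come down to the test environment differing from the one it is supposed to mirror. One cheap safeguard is to record the settings each test environment actually received and compare them with the intended ones before trusting the results. The sketch below assumes both sides are available as flat string dictionaries. The function name and the example settings are hypothetical.

```python
from __future__ import annotations


def environment_drift(
    expected: dict[str, str], actual: dict[str, str]
) -> dict[str, tuple[str | None, str | None]]:
    """Return {setting: (expected, actual)} for every setting that differs
    between the intended environment and the provisioned one. A setting
    missing on one side shows up as None on that side."""
    drift: dict[str, tuple[str | None, str | None]] = {}
    for key in sorted(expected.keys() | actual.keys()):
        want, got = expected.get(key), actual.get(key)
        if want != got:
            drift[key] = (want, got)
    return drift


if __name__ == "__main__":
    # Hypothetical settings: in practice these might come from the provider's
    # APIs or from infrastructure-as-code files for each environment.
    production = {"os": "Ubuntu 22.04", "vcpus": "4", "memory_gib": "16", "region": "eu-west"}
    test_env = {"os": "Ubuntu 22.04", "vcpus": "2", "memory_gib": "16", "debug": "on"}
    for setting, (want, got) in environment_drift(production, test_env).items():
        print(f"{setting}: expected {want!r}, got {got!r}")
```

Failing a test run on any nonempty drift, or at least logging the drift next to the results, makes it easier to separate real application defects from problems caused by the environment.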

Examples of cloud testing

Cloud test environments can be used to perform a broad range of functional and nonfunctional tests for customers. Here are some examples of software tests often conducted in cloud environments:

- Functional testing. Includes smoke testing, sanity testing, white box testing, black box testing, integration testing, user acceptance testing and unit testing.

- System testing. Tests the complete, integrated application to ensure its features function properly.

- Interoperability testing. Checks that the application continues to work correctly with other systems and platforms, including when changes are made to its underlying infrastructure.

- Stress testing. Determines whether applications remain effective and stable under peak and beyond-peak workloads.

- Load testing. Measures the application's response to simulated user traffic loads.

- Latency testing. Measures the delay between actions and responses within an application.

- Performance testing. Tests the performance of an application under specific workloads and is used to determine thresholds, bottlenecks and other limitations in application performance.

- Availability testing. Ensures an application stays available with minimal outages when the cloud provider makes changes to the infrastructure.

- Multi-tenancy testing. Examines whether performance is maintained when additional users or tenants access the application concurrently.

- Security testing. Tests for security vulnerabilities in the data and code in the application.

- Disaster recovery testing. Ensures cloud downtime and other contingency scenarios will not lead to irreparable damages, such as data loss.

- Browser performance testing. Tests application performance across different web browsers.

- Compatibility testing. Tests application performance across different operating systems (OSes).
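Load, latency and stress testing share the same basic mechanics: generate concurrent simulated users, time each response and summarize the distribution. The sketch below is a minimal, tool-agnostic version of that loop. It assumes the system under test can be reached through a plain callable `target`, which is hypothetical here. In a real cloud test, that callable would send a request to the deployed application, and a dedicated load-testing tool would usually replace this harness.

```python
from __future__ import annotations

import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_load_test(target: Callable[[], object], users: int,
                  requests_per_user: int) -> dict[str, float]:
    """Simulate `users` concurrent users, each calling `target` sequentially
    `requests_per_user` times, and summarize response latency in milliseconds."""
    if users < 1 or requests_per_user < 1 or users * requests_per_user < 2:
        raise ValueError("need at least one user and two requests in total")

    def one_user() -> list[float]:
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            target()  # stand-in for a request to the application under test
            latencies.append((time.perf_counter() - start) * 1000)
        return latencies

    # One worker thread per simulated user so requests overlap in time.
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(one_user) for _ in range(users)]
        samples = [ms for f in futures for ms in f.result()]

    cuts = statistics.quantiles(samples, n=100)  # cuts[49] ~ p50, cuts[94] ~ p95
    return {"requests": len(samples), "p50_ms": cuts[49],
            "p95_ms": cuts[94], "max_ms": max(samples)}


if __name__ == "__main__":
    # Hypothetical stub that "responds" in about 10 ms.
    print(run_load_test(lambda: time.sleep(0.01), users=20, requests_per_user=5))
```

Percentiles are reported instead of an average because a few slow responses under load are exactly what these tests aim to expose. To use the same harness as a rough stress test, raise `users` step by step until p95 exceeds an agreed latency budget.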

Cloud testing vs. on-premises testing for applications

Compared to a traditional on-premises testing environment, cloud testing offers users pay-per-use pricing, flexibility and reduced time to market. The test processes and technologies used to perform functional testing against cloud-based applications are not significantly different from those used for traditional in-house applications, but awareness of the nonfunctional risks around the cloud is critical to success.

For example, if testing involves production data, then appropriate security and data integrity processes and procedures need to be in place and validated before functional testing can begin. Furthermore, cloud testing can be undertaken from any location or device with a network connection, as opposed to testing on premises, which must take place on site.
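One common process for the production-data case is to pseudonymize sensitive fields before the data leaves the organization. The sketch below shows a single such technique, keyed hashing with HMAC-SHA-256. It is not a complete data protection program. The field list, function name and token format are hypothetical. Because the same input always maps to the same token, joins and duplicate checks still work in tests, while the original values cannot be recovered from the tokens without the key, which stays on premises.

```python
import hashlib
import hmac

# Hypothetical: which fields count as sensitive depends on your data classification.
SENSITIVE_FIELDS = frozenset({"email", "phone"})


def pseudonymize(record: dict, key: bytes, fields=SENSITIVE_FIELDS) -> dict:
    """Return a copy of `record` with each sensitive field replaced by a
    deterministic keyed-hash token. The input record is not modified."""
    masked = dict(record)
    for name in record.keys() & set(fields):
        digest = hmac.new(key, str(record[name]).encode("utf-8"),
                          hashlib.sha256).hexdigest()
        masked[name] = f"{name}_{digest[:16]}"  # shortened token for readability
    return masked


if __name__ == "__main__":
    # In practice the key would come from an on-premises secret store.
    key = b"replace-with-a-secret-key"
    row = {"id": 42, "email": "jane@example.com", "phone": "555-0100", "total": 19.5}
    print(pseudonymize(row, key))
```

Tokens made with different keys do not match, so keeping one key per test environment also prevents data sets from different environments from being linked.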

How to test in the cloud

Before testing in the cloud, it is important to determine which cloud testing tools and services are the correct fit for the organization. One approach is to use specific tools for individual test types, such as performance, load, stress and security testing.

Another option is for organizations to use complete, end-to-end testing as a service (TaaS) products. While outsourcing to TaaS vendors may help organizations reduce costs, testing times and IT management workloads, it might not be the best fit for organizations that require in-depth knowledge of a unique infrastructure or very specific types of tests.


Weigh your options

Cloud testing can serve a few different purposes: assessing how the functions of an IaaS or PaaS offering work, assessing how an in-cloud SaaS application works, or using cloud tools to augment a QA strategy.

While cloud testing can benefit organizations through lower costs, flexibility, faster testing and better collaboration, there are still challenges inherently associated with the use of the cloud.

When considering different testing methods, businesses should prioritize finding the software testing methods that fit their organizational needs. If a business determines that a cloud testing method is the best fit, it should weigh its options for cloud testing tools and techniques based on the type of cloud testing it is pursuing and the technical details of its applications and cloud infrastructure.