What is the difference between SIT and UAT?

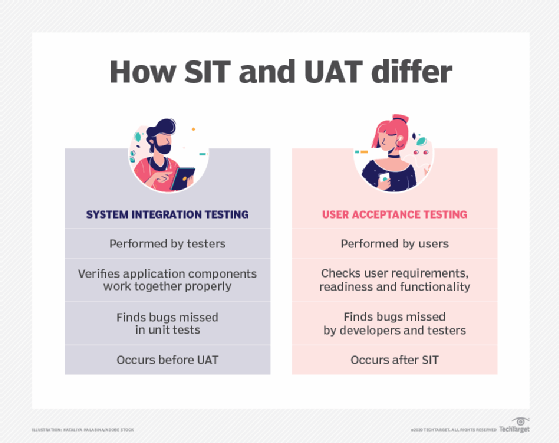

User acceptance testing and system integration testing differ in one key way: the person who does the testing. Learn when to apply UAT vs. SIT.

Not all types of software testing are alike. Some testing scenarios even require different perspectives to gauge whether software has met the mark. Such is the case with user acceptance testing, which differs quite a bit from a technical approach, like system integration testing.

There are no industry standards for user acceptance testing (UAT) or system integration testing (SIT). With UAT, the people who use the system or establish the product requirements conduct the tests. UAT can vary greatly depending on the tester's experience with the application and with testing itself.

With SIT, a QA team evaluates whether applications, and their many components, function as intended before they go to the client. Isolated tests, such as unit tests, often fail to catch bugs that SIT uncovers.

The primary difference between SIT and UAT comes down to their vastly different test objectives and the testers' experience. Time is a factor in both UAT and SIT scenarios. Testers need to know how SIT and UAT differ, what each assessment brings to software quality and who performs each test. Read on to learn more about these testing processes.

What is UAT?

With user acceptance testing, the development organization gives the build or release to the target consumer of the system late in the software development lifecycle (SDLC). Either the end users or the consuming organization's product development team then perform their expected activities in the system to confirm that it is viable and accept it. The UAT phase is typically one of the final steps in an overall software development project.

Some types of UAT include the following:

- Alpha and beta testing. With alpha testing, users or user groups test the software while it is still in development to identify bugs before release. In beta testing, which comes after the software team has acted on the alpha test results, the organization releases the product into the customer's environment to gather more feedback and improve quality.

- Black box testing. The user does not see the internal code or application components, which is where the term black box stems from. Users must gauge how well the product meets requirements based on their interaction alone. Black box testing is also a type of functional testing.

- Contract acceptance testing. This type of UAT occurs when a contract between the vendor and the user defines criteria and specifications for a product. With contract acceptance testing, the user verifies that the software meets the agreed-upon criteria.

- Operational acceptance testing. The client organization determines its readiness to use the software, gauging criteria such as employee training, software maintenance and how failures will be handled.

- Regulation acceptance testing. Also called compliance acceptance testing, this process verifies that the software meets the users' regulatory or compliance needs.
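To make black box and contract acceptance testing more concrete, here is a minimal Python sketch. The function `shipping_fee_cents`, its free-shipping threshold and the criteria table are hypothetical stand-ins for a real product and its agreed-upon requirements. The point is only that each check compares observable outputs against stated criteria without looking at how the function works internally.

```python
# Hypothetical system under test. In black box testing, the tester never
# reads this code; only its inputs and outputs matter.
def shipping_fee_cents(order_total_cents: int) -> int:
    return 0 if order_total_cents >= 5000 else 599


# Hypothetical acceptance criteria, written from the requirements rather than
# from the implementation: (description, input, expected output).
ACCEPTANCE_CRITERIA = [
    ("small order pays the flat fee", 1999, 599),
    ("order at the threshold ships free", 5000, 0),
    ("large order ships free", 12000, 0),
]


def run_acceptance(system, criteria):
    """Call the system with each input and return a message for every failed criterion."""
    failures = []
    for description, given, expected in criteria:
        actual = system(given)
        if actual != expected:
            failures.append(f"{description}: expected {expected}, got {actual}")
    return failures


if __name__ == "__main__":
    failures = run_acceptance(shipping_fee_cents, ACCEPTANCE_CRITERIA)
    print("ACCEPTED" if not failures else "\n".join(failures))
```

Keeping the criteria as plain data, separate from the runner, is one way to let the people who own the requirements read and extend them without touching test code.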

One drawback of UAT is that it leaves users little time to test before the product launch. If users do find defects, they might feel pressure to accept the software as is rather than delay a project. In some cases, clients have waited months for the software and are eager to start using it to meet business needs. These dynamics pressure users to accept the software under test, even if defects prevent or inhibit functionality.

Another drawback of UAT is that users often don't know how to test properly. QA engineers learn techniques that help them vet software, but users often arrive at the keyboard without any knowledge of how to test it, even when requirements are in place. Those users tend to perform happy path testing, which exercises only the expected, error-free workflow, rather than more involved test cases or unusual test conditions, either out of inexperience or because they lack the time to work through other scenarios.
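The gap between happy path testing and more involved test cases can be sketched in Python. The `apply_discount` feature below is hypothetical and contains a deliberate defect: it never checks that the percentage lies between 0 and 100. The rule that an invalid percentage should raise `ValueError` is likewise an assumed requirement. The happy path check passes; only the boundary and invalid-input checks expose the problem.

```python
def apply_discount(price_cents: int, percent: int) -> int:
    # Hypothetical feature under test, with a deliberate defect:
    # it never validates that percent lies between 0 and 100.
    return price_cents - price_cents * percent // 100


def check(description: str, condition: bool) -> bool:
    """Print a PASS/FAIL line and return the result."""
    print(("PASS" if condition else "FAIL") + ": " + description)
    return condition


def happy_path_tests() -> list:
    # The typical workflow an untrained tester tries first.
    return [check("10% off 1000 cents is 900", apply_discount(1000, 10) == 900)]


def edge_case_tests() -> list:
    # Boundary and invalid inputs a trained tester would also try.
    results = [
        check("0% off leaves the price unchanged", apply_discount(1000, 0) == 1000),
        check("100% off makes the item free", apply_discount(1000, 100) == 0),
    ]
    try:
        apply_discount(1000, 150)  # the defect: returns a negative price instead of raising
        rejected = False
    except ValueError:
        rejected = True
    results.append(check("150% off is rejected with ValueError", rejected))
    return results


if __name__ == "__main__":
    print("happy path passed:", all(happy_path_tests()))
    print("edge cases passed:", all(edge_case_tests()))
```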

Users should try to understand the project's specific constraints and determine which changes are reasonable to request from developers. If you don't believe users can handle UAT effectively, commission outside help with the task.

What is SIT?

System integration testing, also called integration testing, evaluates whether all system components, both hardware and software, work together as expected in a complete system. Software QA teams conduct SIT, not users.

Testers who evaluate functionality as it's delivered are usually well prepared to also check the application as a whole, integrated solution. SIT is often a more technical testing process than UAT. Testers design and execute SIT, drawing on the familiarity they have built up over the SDLC with the types of defects common in the application.

The SIT phase precedes UAT. Because users and testers differ significantly in technical expertise, the two groups are likely to find very different defects in UAT and SIT. SIT often uncovers bugs that unit tests miss: defects that depend on a workflow or on the interaction between two application components, such as operations that must happen in a particular order.
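Here is a minimal Python sketch of the kind of sequencing defect that SIT catches and unit tests miss. The two components and the requirement that a flat coupon applies before sales tax are hypothetical. Each component passes its own unit test, but the integrated `checkout` workflow calls them in the wrong order, so only an end-to-end check reveals the wrong total.

```python
def apply_coupon(subtotal_cents: int, coupon_cents: int) -> int:
    """Component A: subtract a flat coupon, never going below zero."""
    return max(subtotal_cents - coupon_cents, 0)


def add_tax(amount_cents: int, rate_percent: int) -> int:
    """Component B: add sales tax, rounding down to whole cents."""
    return amount_cents + amount_cents * rate_percent // 100


def checkout(subtotal_cents: int, coupon_cents: int, rate_percent: int) -> int:
    """Integrated workflow with a sequencing defect: it taxes before applying
    the coupon, although the (hypothetical) requirement says coupon first."""
    return apply_coupon(add_tax(subtotal_cents, rate_percent), coupon_cents)


def unit_tests_pass() -> bool:
    # Each component is checked in isolation, and each is correct.
    return apply_coupon(10_000, 1_000) == 9_000 and add_tax(9_000, 10) == 9_900


def integration_test_pass() -> bool:
    # Requirement: 10,000 cents minus a 1,000-cent coupon, then 10% tax = 9,900 cents.
    return checkout(10_000, 1_000, 10) == 9_900


if __name__ == "__main__":
    print("unit tests pass:", unit_tests_pass())
    print("integration test passes:", integration_test_pass())
```

Running it should report that the unit tests pass and the integration test fails: the workflow taxes 10,000 cents up to 11,000 and then subtracts the coupon, returning 10,000 instead of the required 9,900.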

A little overlap between SIT and UAT might be desirable as a second check of app functionality. However, the fact that both SIT and UAT occur late in the SDLC, close to release, is often the only element the two forms of testing have in common.