
How to manage and maintain automated UI tests

Examine how teams can navigate the obstacles associated with automated UI tests -- including maintenance, debugging, change management and business logic challenges.

As recently as 20 years ago, many experts considered UI tests so hard to write and maintain that they advised against using them. Yet today, the return on investment for these tests has improved enough that they are commonly used in the enterprise -- if a team takes the right steps.

Automated UI tests have great value because they mimic the user experience, run end-to-end and tie together every component and subsystem. For the tests to work, every one of those components and subsystems has to be properly aligned and running. As a result, UI tests are difficult to set up, sometimes hard to write, slow to run and brittle. These limitations lead to false errors and higher maintenance costs.

To reap the potential rewards of UI tests, team members should plan for testability, create a library level that maps user flows to interactions and design a process so that tests automatically pop out of each story as part of the process.

Why automated UI tests are hard to maintain

At the end of every manual test case is the hidden expected result of "… and nothing else odd happened, but the expected things did." It's particularly challenging to write UI tests that evaluate this result.

In addition to the assertion/validation problem, UI tests need to combine all the components, end-to-end. Whereas a unit test might require lines of code in the same file, a UI test needs the source code, libraries, databases, API, network, browser and the client computer. UI tests require a team to set up at least two independent systems that are connected over a network, such as a test server and a client or demand server controlled by another computer program.

Debugging automated UI tests

When an automated UI test fails -- and it most likely will at some point -- a team member will need to rerun the test to see whether it was a true failure, meaning the code didn't work as expected, or whether a legitimate code change simply left the test out of date. As such, teams should treat the first run of an automated UI test more like automated change detection than a true test.
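The rerun step can itself be scripted so that the first triage pass doesn't need a person. The following is a minimal sketch, assuming a hypothetical `run_test` callable that returns `True` when the test passes; the function name and labels are illustrative and not taken from any particular tool.

```python
from typing import Callable


def classify_failure(run_test: Callable[[], bool], reruns: int = 3) -> str:
    """Rerun a test that has just failed and label the failure.

    `run_test` is a hypothetical callable that returns True on a pass.
    A failure that repeats on every rerun is "consistent" and points at a
    real change in behavior (a defect, or a test that is now out of date).
    A failure that disappears on any rerun is "intermittent" and points at
    timing, environment or test-data problems.
    """
    outcomes = [run_test() for _ in range(reruns)]
    if not any(outcomes):
        return "consistent"
    return "intermittent"
```

A consistent result still needs a human to decide whether the code or the test is wrong; the sketch only separates repeatable failures from noise.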

Test teams should avoid the dreaded and costly loop associated with a UI test failure. For example, if an automated UI test fails, the tester could end up following these steps in hopes of finding an answer:

- Setup

- Test

- Check failures

- Rerun

- Debug

- Fix the test (or code)

- Return to setup

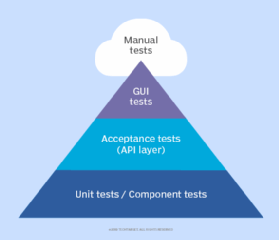

Unit tests, however, don't have these sorts of issues. Solid unit tests can have a dramatic positive effect on reliability. In the early 2000s, Mike Cohn, along with other experts such as Lisa Crispin, suggested that teams shift their emphasis to unit tests. The model Cohn suggested was the test automation pyramid.

Be aware that the pyramid was defined at a time before Selenium existed; tools at the time were based on Windows Desktop Client Applications -- sometimes called Win32 -- and relatively clunky.

Nowadays, things have changed a bit. Most teams have some amount of unit test coverage and GUI tools are much better suited to these needs. However, test automation is still an area of concern, and test maintenance continues to be tricky.

Technical ways to reduce maintenance cost

Most GUI test automation projects start off entirely backwards. The non-technical testing staff first creates GUI tests from the top of the pyramid. But programmers created the UI to make their own job easier, not the testers'. Elements such as textboxes, checkboxes and buttons might have unique identifiers in the code that only the software understands, or no identifiers at all. The programmers might tie elements back to objects in a database, timestamps or other things that the GUI tools know nothing about. As a result, these GUI tests won't run properly, and the overall test program won't be successful.

One way to reduce maintenance costs is to add testability. For example, UI elements should have named IDs that don't change, even if the text is translated into a different language. Additionally, the software itself can have setup and teardown routines exposed at the command line level. Writing data population and setup routines at the command line level allows all test setup to be loaded from a test file in a second or two. The alternative of filling in data through the tests themselves creates redundant and overlapping tests that are expensive to write, slow to run and harder to debug.
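As an illustration of a setup routine exposed at the command line, here is a minimal sketch that resets a SQLite database and loads known test data from a JSON file. The table names (`users`, `pages`), the fixture layout and the function names are all assumptions made for this example; a real application would substitute its own schema and storage.

```python
import argparse
import json
import sqlite3


def reset(conn: sqlite3.Connection) -> None:
    """Teardown plus setup: drop and recreate the hypothetical tables."""
    conn.executescript(
        """
        DROP TABLE IF EXISTS pages;
        DROP TABLE IF EXISTS users;
        CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT UNIQUE NOT NULL);
        CREATE TABLE pages (
            id INTEGER PRIMARY KEY,
            title TEXT NOT NULL,
            owner TEXT NOT NULL
        );
        """
    )


def load_fixture(conn: sqlite3.Connection, fixture: dict) -> int:
    """Reset the database, insert the fixture rows, return the row count."""
    reset(conn)
    users = [(u["name"],) for u in fixture.get("users", [])]
    pages = [(p["title"], p["owner"]) for p in fixture.get("pages", [])]
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO users (name) VALUES (?)", users)
        conn.executemany("INSERT INTO pages (title, owner) VALUES (?, ?)", pages)
    return len(users) + len(pages)


def main(argv: list[str]) -> int:
    """Command-line wrapper: main(["test.db", "fixture.json"])."""
    parser = argparse.ArgumentParser(description="Reset and seed a test database.")
    parser.add_argument("db", help="path to the SQLite database file")
    parser.add_argument("fixture", help="path to a JSON file with known test data")
    args = parser.parse_args(argv)
    with open(args.fixture, encoding="utf-8") as fh:
        fixture = json.load(fh)
    conn = sqlite3.connect(args.db)
    try:
        count = load_fixture(conn, fixture)
    finally:
        conn.close()
    print(f"loaded {count} rows into {args.db}")
    return 0
```

Calling `main(sys.argv[1:])` from a script entry point would let a test run start from the same known data every time, without clicking through the UI to create it.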

To test repeatedly end-to-end, testers could create their own staging environment with the right version of the code and populate it with data -- perhaps importing a standard file with known test data. Or teams could take things that won't be tested, such as users, pages, groups and permissions, and make them drivable by API or command line function.

Once the other pieces of setup are automated, test automation design comes into play. The idea is to break the tests into small bits of functionality. DOM to database is a QA approach that involves very small tests that test only one thing at a time; as a result, these tests run faster and require less debugging. If a micro-feature-test takes 30 seconds to fail, then it might take only 10 minutes to debug it, have someone fix the code and rerun the cycle. The tests shouldn't have any interdependencies so they can run in parallel or random order.
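One way to check that tests really are independent is to run them in shuffled order and confirm the results don't change. The sketch below assumes a toy in-memory `state` dictionary in place of a real application; the test names and the `run_shuffled` helper are hypothetical.

```python
import random
from typing import Callable


def fresh_state() -> dict:
    """Each test builds its own state, so none relies on another's leftovers."""
    return {"pages": {}}


def test_create_page() -> None:
    state = fresh_state()
    state["pages"]["Home"] = "welcome"
    assert state["pages"] == {"Home": "welcome"}


def test_rename_page() -> None:
    state = fresh_state()
    state["pages"]["Draft"] = "text"  # creates its own page instead of reusing "Home"
    state["pages"]["Final"] = state["pages"].pop("Draft")
    assert state["pages"] == {"Final": "text"}


def test_delete_page() -> None:
    state = fresh_state()
    state["pages"]["Temp"] = "x"
    del state["pages"]["Temp"]
    assert state["pages"] == {}


def run_shuffled(tests: list[Callable[[], None]], seed: int) -> dict[str, bool]:
    """Run the tests in a seed-determined random order; map name to pass/fail."""
    order = list(tests)
    random.Random(seed).shuffle(order)
    results: dict[str, bool] = {}
    for test in order:
        try:
            test()
            results[test.__name__] = True
        except AssertionError:
            results[test.__name__] = False
    return results
```

If a result flips between seeds, some test depends on state left behind by another. Recording the seed makes a failing order reproducible.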

Teams can also try to build up a test command library with generic commands such as login, search, tag, create page (with given title, body of attachment) and more. When the interactions required for login change, the library needs to change in just one place, not 50. This step is different from the setup code because although it slowly runs through the browser, it functions like a code library -- a series of functions where the variables such as user-ID are passed in.
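A command library of this kind might look like the following minimal sketch. The `Browser` interface, the `RecordingBrowser` stand-in and every element ID shown here are assumptions for illustration, not the API of any real driver; in practice the functions would wrap whatever browser automation tool the team uses.

```python
from typing import Protocol


class Browser(Protocol):
    """Minimal driver interface assumed for this sketch (not a real library)."""

    def open(self, path: str) -> None: ...
    def type(self, element_id: str, text: str) -> None: ...
    def click(self, element_id: str) -> None: ...


class RecordingBrowser:
    """Stand-in driver that records interactions instead of driving a browser."""

    def __init__(self) -> None:
        self.actions: list[tuple[str, ...]] = []

    def open(self, path: str) -> None:
        self.actions.append(("open", path))

    def type(self, element_id: str, text: str) -> None:
        self.actions.append(("type", element_id, text))

    def click(self, element_id: str) -> None:
        self.actions.append(("click", element_id))


def login(browser: Browser, user_id: str, password: str) -> None:
    """The only place that knows how login works; element IDs are hypothetical."""
    browser.open("/login")
    browser.type("login-username", user_id)
    browser.type("login-password", password)
    browser.click("login-submit")


def create_page(browser: Browser, title: str, body: str) -> None:
    """Create a page through the UI with the given title and body."""
    browser.open("/pages/new")
    browser.type("page-title", title)
    browser.type("page-body", body)
    browser.click("page-save")
```

A test then reads as a user flow, for example `login(browser, "alice", "secret")` followed by `create_page(browser, "Home", "Welcome")`. If the login form gains a field, only `login` changes.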

Process tricks to reduce maintenance cost

A powerful trick to improve GUI test automation maintainability is to pull it into the development stage. The team should treat stories as incomplete until the automated tests run. In that world, the automated tests become a visual demonstration of working software. Without that rule, testers who need to update the tests will always be torn between fixing work for a story that is already "done" and the current work.

That rule about "the story isn't done" also applies to breaking changes. When programmers make a change that breaks other stories, the tests need to run under continuous integration, which traces the breaking change back to a commit. Be aware that it is not enough to have the tests for a feature pass if the change introduces a regression elsewhere. The feature is not completed until it is integrated with the existing test suite for the entire application, and all the tests continue to pass.

These changes bring test maintenance into development and give the task to programmers, not to someone else further down the line. While this shift creates extra work for programmers when tests break, it provides them the incentive to find ways to write better tests or make less-intrusive changes.

How to change automated UI tests

All these changes start at the feature level, sometimes called the "story." Developers and testers need to collaborate to create a shared understanding of what tests will run before a programmer writes the code. Doing so adds the crucial element of testability as a requirement of the feature and turns those requirements into a discovery process. Here, developers, testers and even product owners can work together to figure out the best means to build their product and not just create more work for each other.

After the team writes sanity or smoke tests, it generally makes sense to pivot and develop tests that match the new features. After all, these new software features are the most likely to have changes that break things.

Many teams neglect to decide how much test automation they want. For example, how many checks will be institutionalized as a process with the automation and then run regularly? It will likely be far fewer than what a human explores, and the automated checks instead work as a sort of living documentation, or an example of what the software should do. GUI tests might not be the best format for business rules, but they are better than nothing, and certainly work for examples of user interaction at the UI level.

If the team knows the test IDs enough in advance and the examples are clear, it is possible for the testers to create the tests before the production code exists. The programmers can then write the code to satisfy the tests, changing the tests if need be. Once the tests pass, the code can be demonstrated visually, and the feature is ready for human exploration. Few teams can accomplish this, and some might not want to, but it's a good example of the kind of collaborative work that leads to more maintainable code.

Finally, I should mention avoiding premature automation. If a UI is undergoing radical change, it might be a good idea to step back and do very little UI automation, while capturing the user flows so the team can create tests later.

That leaves one more option -- separating the business logic from the UI.

Separate the business logic from the UI

The test automation pyramid has a middle layer: the integration layer, more commonly called the API layer. Tests at this layer hit the database and come back much more quickly than a web browser, with fewer moving parts.

For example, in an insurance application, the GUI test might need to poke the system to make sure a simple call returns the correct result all the way through. The tools might also test some error conditions in the UI. However, outside of these two brief examples, the software is likely to have dozens or hundreds of combinations of discounts and costs. Once it's clear that the low-level system will accurately receive all the flags and send the right answer, it can be helpful to isolate and test just the calculation engine.

Those dozens, hundreds or perhaps thousands of tests could run in a tenth of a second each, instead of perhaps 10 seconds each. It might be possible to express the tests as something like a spreadsheet for a visual example of business rules. That makes API tests something that business users can understand as living documentation of their own business rules.
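To make the spreadsheet idea concrete, here is a minimal sketch of table-driven tests against an isolated calculation engine. The discount rules, the `Quote` fields and the amounts are invented for this example and do not describe any real insurance product; amounts are in cents to avoid floating-point rounding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Quote:
    base_cents: int
    multi_policy: bool
    safe_driver: bool


def premium_cents(quote: Quote) -> int:
    """Hypothetical rules: 10% off for multi-policy, 15% off for safe driver.

    Discounts add together but are capped at 20% in total.
    """
    discount_pct = 0
    if quote.multi_policy:
        discount_pct += 10
    if quote.safe_driver:
        discount_pct += 15
    discount_pct = min(discount_pct, 20)
    return quote.base_cents * (100 - discount_pct) // 100


# Each row reads like a spreadsheet line:
# base premium, multi-policy?, safe driver?, expected premium
RULES_TABLE = [
    (100_000, False, False, 100_000),
    (100_000, True, False, 90_000),
    (100_000, False, True, 85_000),
    (100_000, True, True, 80_000),  # 25% combined, capped at 20%
]


def check_table(table: list[tuple[int, bool, bool, int]]) -> list[str]:
    """Run every row through the engine and describe any mismatches."""
    failures = []
    for base, multi, safe, expected in table:
        actual = premium_cents(Quote(base, multi, safe))
        if actual != expected:
            failures.append(
                f"base={base} multi={multi} safe={safe}: "
                f"expected {expected}, got {actual}"
            )
    return failures
```

Because no browser or network is involved, a table with thousands of rows can run in well under a second, and a business user can review or extend the rows without reading the engine code.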